Analyzing the mountains of data generated by the Large Hadron Collider at the European laboratory CERN takes so much time that even the computers need coffee. Or rather, Coffea: Columnar Object Framework for Effective Analysis.

A package in the programming language Python, Coffea (pronounced like the stimulating beverage) speeds up the analysis of massive data sets in high-energy physics research. Although Coffea streamlines computation, the software's primary goal is to optimize scientists' time.

"The efficiency of a human being in producing scientific results is of course affected by the tools that you have available," said Matteo Cremonesi, a postdoc at the U.S. Department of Energy's Fermi National Accelerator Laboratory. "If it takes more than a day for me to get a single number out of a computation, which often happens in high-energy physics, that's going to hamper my efficiency as a scientist."

Frustrated by the tedious manual work they faced when writing computer code to analyze LHC data, Cremonesi and Fermilab scientist Lindsey Gray assembled a team of Fermilab researchers in 2018 to adapt cutting-edge big data techniques to solve the most challenging questions in high-energy physics. Since then, around a dozen research groups on the CMS experiment, one of the LHC's two large general-purpose detectors, have adopted Coffea for their work.

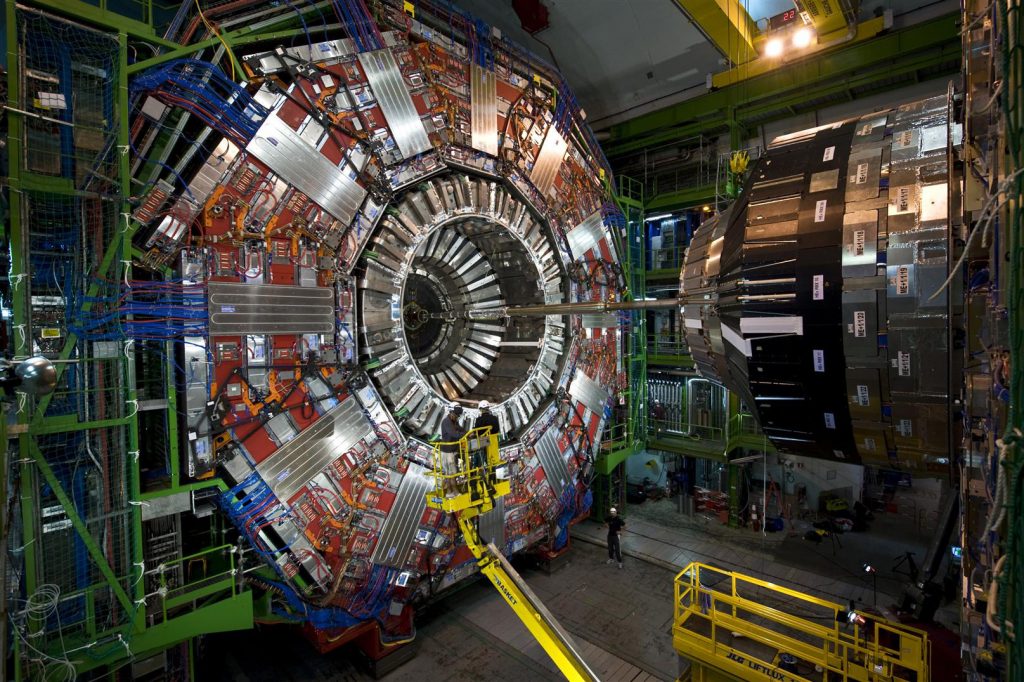

Around a dozen research groups on the CMS experiment at the Large Hadron Collider have adopted the Coffea data analysis tool for their work. Starting from information about the particles produced in collisions, Coffea enables large statistical analyses that hone researchers' understanding of the underlying physics, enabling faster run times and more efficient use of computing resources. Image: CERN

Starting from information about the particles produced in collisions, Coffea enables large statistical analyses that hone researchers' understanding of the underlying physics. (Data processing facilities at the LHC carry out the initial conversion of raw data into a format particle physicists can use for analysis.) A typical analysis on the current LHC data set involves processing a whopping 10 billion or so particle events that can add up to about 50 terabytes of data. That's the data equivalent of approximately 25,000 hours of streaming video on Netflix.
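The quoted figures can be cross-checked with a little arithmetic. The short snippet below is a back-of-envelope sketch that takes the rounded numbers above at face value and assumes decimal units (1 TB = 10^12 bytes, 1 GB = 10^9 bytes). It suggests that an average event occupies about 5 kilobytes and that the Netflix comparison corresponds to roughly 2 gigabytes of video per hour.

```python
# Back-of-envelope check of the figures quoted above.
# Assumption: decimal units, 1 TB = 10**12 bytes and 1 GB = 10**9 bytes.
TOTAL_BYTES = 50 * 10**12      # "about 50 terabytes"
N_EVENTS = 10 * 10**9          # "10 billion or so" collision events
STREAMING_HOURS = 25_000       # quoted Netflix-equivalent viewing time

# Average size of one collision event, in bytes.
bytes_per_event = TOTAL_BYTES / N_EVENTS

# Data rate implied by the streaming comparison, in GB per hour.
gb_per_streamed_hour = TOTAL_BYTES / STREAMING_HOURS / 10**9

print(f"~{bytes_per_event / 1000:.0f} kB per event")            # ~5 kB per event
print(f"~{gb_per_streamed_hour:.0f} GB per hour of streaming")  # ~2 GB per hour of streaming
```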

At the heart of Fermilab's analysis tool lies a shift from a method known as event loop analysis to one called columnar analysis.

"You have a choice whether you want to iterate over each row and do an operation within the columns or if you want to iterate over the operations you're doing and attack all the rows at once," explained Fermilab postdoctoral researcher Nick Smith, the main developer of Coffea. "It's sort of an order-of-operations thing."

For example, imagine that for each row, you want to add together the numbers in three columns. In event loop analysis, you would start by adding together the three numbers in the first row. Then you would add together the three numbers in the second row, then move on to the third row, and so on. With a columnar approach, by contrast, you would start by adding the first and second columns for all the rows. Then you would add that result to the third column for all the rows.

"In both cases, the end result would be the same," Smith said. "But there are some trade-offs you make under the hood, in the machine, that have a big impact on efficiency."
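The three-column example can be sketched in plain Python with NumPy. This is an illustration of the two orders of operations, not Coffea's own code: NumPy's vectorized array arithmetic stands in for columnar processing, and the table of random numbers is hypothetical. Both functions perform the same floating-point additions in the same order for every row, so their outputs match exactly; only the way the work is scheduled differs.

```python
import time

import numpy as np

# Hypothetical table: three numeric columns, one entry (row) per collision event.
rng = np.random.default_rng(seed=0)
N_ROWS = 200_000
col_a = rng.random(N_ROWS)
col_b = rng.random(N_ROWS)
col_c = rng.random(N_ROWS)


def event_loop_sum(a, b, c):
    """Event loop style: finish all three additions for one row before moving to the next."""
    out = np.empty(len(a))
    for i in range(len(a)):
        out[i] = a[i] + b[i] + c[i]
    return out


def columnar_sum(a, b, c):
    """Columnar style: add the first two columns for every row, then add the third."""
    partial = a + b      # one vectorized pass over all rows
    return partial + c   # a second vectorized pass over all rows


start = time.perf_counter()
loop_result = event_loop_sum(col_a, col_b, col_c)
loop_seconds = time.perf_counter() - start

start = time.perf_counter()
columnar_result = columnar_sum(col_a, col_b, col_c)
columnar_seconds = time.perf_counter() - start

# Same additions in the same order per row, so the results are bit-for-bit identical.
print("identical results:", np.array_equal(loop_result, columnar_result))
print(f"event loop: {loop_seconds:.3f} s, columnar: {columnar_seconds:.4f} s")
```

The timings printed on the last line depend on the machine and the array size, but the columnar version typically wins by a wide margin because each column operation runs as a single compiled loop instead of many interpreted Python steps.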

In data sets with many rows, columnar analysis runs around 100 times faster than event loop analysis in Python. Yet prior to Coffea, particle physicists primarily used event loop analysis in their work, even for data sets with millions or billions of collisions.

The Fermilab researchers decided to pursue a columnar approach, but they faced a glaring challenge: High-energy physics data cannot easily be represented as a table with rows and columns. One particle collision might generate a slew of muons and several electrons, while the next might produce no muons and many electrons. Building on a library of Python code called Awkward Array, the team devised a way to convert the irregular, nested structure of LHC data into tables compatible with columnar analysis. Generally, each row corresponds to one collision, and each column corresponds to a property of a particle created in the collision.
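Awkward Array handles variable-length data of this kind, in essence, by storing each nested list as one flat array of values plus an array of offsets that marks where each event's entries begin and end. The NumPy-only sketch below imitates that layout to show why it suits columnar analysis. It is not Awkward Array's or Coffea's API; the muon momenta and the 20 GeV cut are made-up illustration values.

```python
import numpy as np

# Hypothetical input: transverse momenta (GeV) of the muons in three collision events.
# Event 0 has two muons, event 1 has none, event 2 has three.
muon_pt_per_event = [[45.2, 30.1], [], [12.0, 8.5, 60.3]]

# Jagged layout: one flat "content" array holding every muon from every event,
# plus "offsets" marking where each event's muons start and stop.
counts = np.array([len(event) for event in muon_pt_per_event])
offsets = np.concatenate(([0], np.cumsum(counts)))
content = np.array([pt for event in muon_pt_per_event for pt in event])

# The muons of event i live in content[offsets[i]:offsets[i + 1]].
assert content[offsets[2]:offsets[3]].tolist() == [12.0, 8.5, 60.3]

# Columnar cut: test every muon in every event with a single vectorized comparison.
PT_THRESHOLD = 20.0  # hypothetical selection cut, in GeV
passes_cut = content > PT_THRESHOLD

# Map each muon back to its event, then count the muons passing the cut per event.
event_index = np.repeat(np.arange(len(counts)), counts)
n_selected = np.bincount(event_index[passes_cut], minlength=len(counts))

print("offsets:", offsets.tolist())                      # [0, 2, 2, 5]
print("selected muons per event:", n_selected.tolist())  # [2, 0, 1]
```

The selection never loops over events in Python: the cut is applied to the flat column of all muons at once, and the offsets let the results be regrouped by collision afterward. Awkward Array hides this bookkeeping behind a higher-level nested-array interface.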

Coffea's benefits extend beyond faster run times (minutes, compared with hours or days for interpreted Python code) and more efficient use of computing resources. The software takes mundane coding decisions out of the hands of the scientists, allowing them to work at a more abstract level with fewer chances to make errors.

"Researchers are not here to be programmers," Smith said. "They're here to be data scientists."

Cremonesi, who searches for dark matter at CMS, was among the first researchers to use Coffea with no backup system. At first, he and the rest of the Fermilab team actively sought to persuade other groups to try the tool. Now, researchers frequently approach them asking how to apply Coffea to their own work.

Soon, Coffea's use will expand beyond CMS. Researchers at the Institute for Research and Innovation in Software for High Energy Physics, supported by the U.S. National Science Foundation, plan to incorporate Coffea into future analysis systems for both CMS and ATLAS, the LHC's other large general-purpose experimental detector. An upgrade to the LHC known as the High-Luminosity LHC, targeted for completion in the mid-2020s, will record about 100 times as much data, making the efficient data analysis offered by Coffea even more valuable for the LHC experiments' international collaborators.

In the future, the Fermilab team also plans to break Coffea into several Python packages, allowing researchers to use just the pieces relevant to them. For instance, some scientists use Coffea mainly for its histogram feature, Gray said.

For the Fermilab researchers, the success of Coffea reflects a crucial shift in particle physicists' mindset.

"Historically, the way we do science focuses a lot on the hardware component of creating an experiment," Cremonesi said. "But we have reached an era in physics research where handling the software component of our scientific process is just as important."

Coffea promises to bring high-energy physics into sync with recent advances in big data in other scientific fields. This cross-pollination may prove to be Coffea's most far-reaching benefit.

"I think it's important for us as a community in high-energy physics to think about what kind of skills we're imparting to the people that we're training," Gray said. "Making sure that we as a field are relevant to the rest of the world when it comes to data science is a good thing to do."

U.S. participation in CMS is supported by the Department of Energy Office of Science.

Fermilab is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit science.energy.gov.